Facepalm: According to Apple's new guidelines, apps intended to operate on visionOS should be labeled as "spatial computing" experiences rather than "virtual reality" apps. The submission guidelines for Vision Pro apps specify that developers should refrain from describing their apps as augmented reality (AR), virtual reality (VR), extended reality (XR), or mixed reality (MR) apps, even if that's their intended functionality.

The Vision Pro headset is set to launch on February 2 in the US, and Apple has released detailed app development requirements. Developers must thoroughly introduce and describe their creations before submitting their code to the device's store. The guidelines emphasize that nearly all apps created for iPhone and iPad can run on Apple Vision Pro without modification, making it easy for developers to extend compatibility to the new platform.

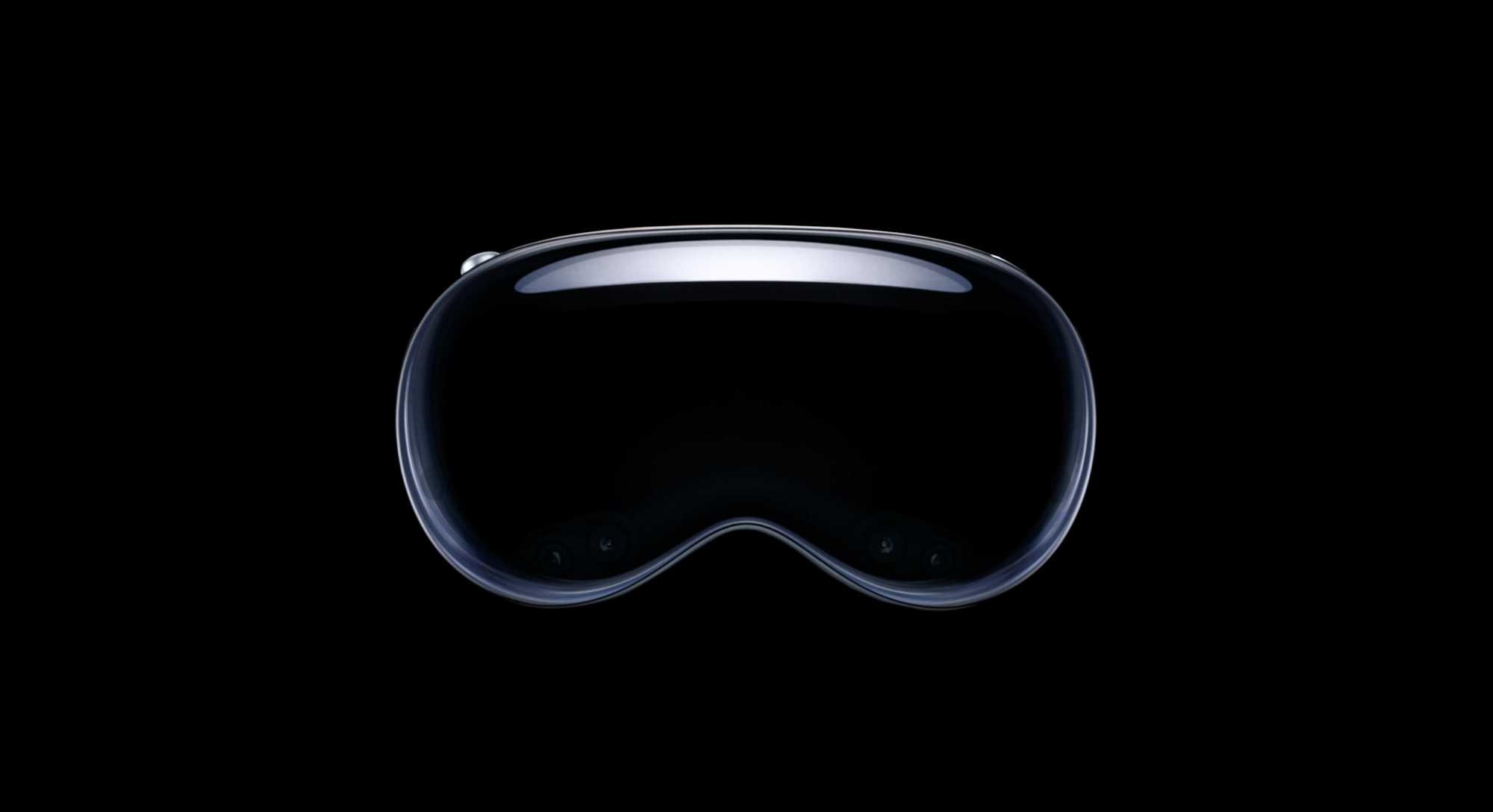

Apple seems to be introducing a novel concept with Vision Pro, its high-end AR/VR headset, which is available for pre-order starting January 19 at a price of $3,499. The device runs visionOS, a specially tailored version of iOS that appears to maintain full compatibility with iPadOS and iOS apps.

When mentioning the new OS, developers – and likely anyone else – should consistently refer to it as "visionOS" with a lowercase 'v,' even when it's the first word in a sentence.

Apple's requirements for Vision Pro's OS and app descriptions lay out marketing principles that end users may easily overlook. The visionOS submission page also introduces intriguing guidelines for spatial computing apps, particularly in the new entertainment category, emphasizing the need to convey accurate "motion information."

In cases where an app involves movements like quick turns or sudden changes in the camera perspective, developers are instructed to indicate this in App Store Connect. The app's product page will then feature a badge, alerting users who may be sensitive to visually intense experiences.

visionOS apps are also required to include specific labels for privacy-sensitive elements. The "Surroundings" label encompasses environment scanning, scene classification, and image detection, while "Body" covers hands and head. Any scanning practices carried out by third-party code within an app must also be declared.

Developers can leverage the visionOS SDK in Xcode 15.2 to create their spatial computing experiences tailored for the Vision Pro headset. Apple highlights the "unique" and immersive capabilities of visionOS in additional development resources, emphasizing that existing apps can be upgraded to fully exploit the platform-specific capabilities.