Founded in 1993, Nvidia is a youngster when compared to other semiconductor companies. However, its impact on the world of technology has been vast. According to the Steam Hardware Survey, over 70 percent of users have an Nvidia graphics card in their gaming system, while Passmark says over 60 percent of users have an Nvidia product.

There was a lot of work involved in getting to that level. The company's first chip, 1995's NV1, seemed like a brilliant product, offering video and 3D acceleration, a built-in sound card, and a gamepad port. However, its reliance on quadratic texture mapping instead of triangle polygon rendering meant that Nvidia dropped the ball on its big debut. Lesson learned, as the chips that followed included some surefire hits. Let's take a look...

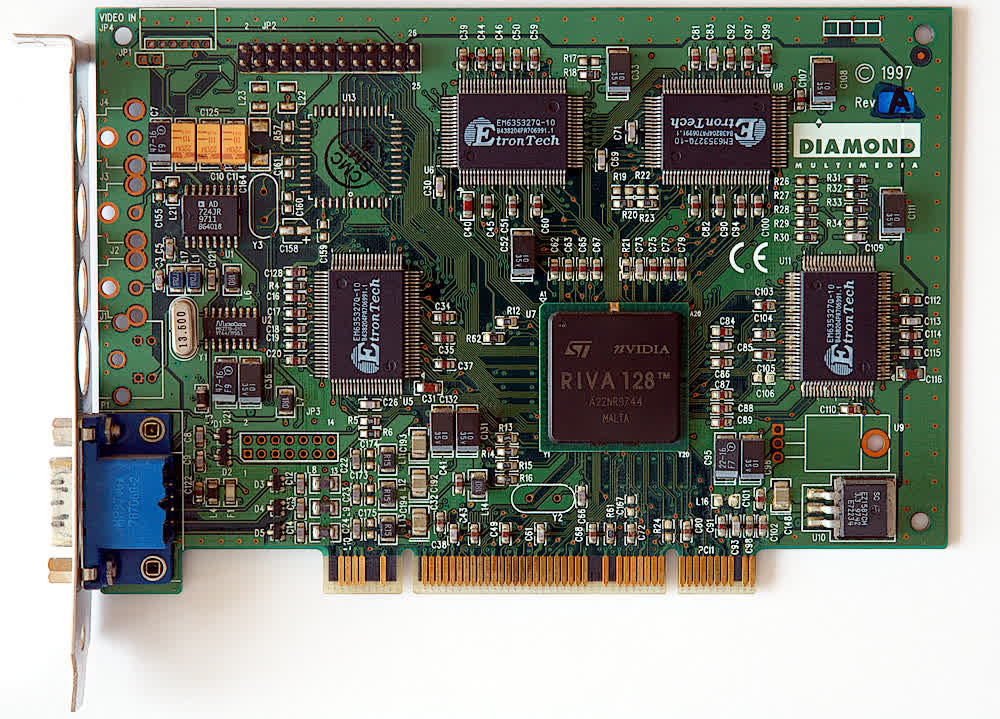

Nvidia RIVA 128

Image: André Karwath

An acronym for Real-time Interactive Video and Animation accelerator, the RIVA 128 was the successor to the NV1 and a product that put everyone in the space on notice. Featuring support for DirectX 5 and OpenGL, the RIVA 128 didn't repeat the mistakes of its predecessor. It seemed future-proof from the get-go, with 4 MB of SGRAM and AGP 2X support.

At the time, it could keep up with 3Dfx Voodoo cards, which were the fastest cards on the market. Over a million units shipped within the first four months of the RIVA 128's release, cementing the importance of both the product and the company.

Nvidia RIVA TNT2

The successor to the RIVA 128, the TNT was good but was still outperformed by 3Dfx. But the following year, Nvidia delivered the TNT2 with several significant improvements. A process shrink to 250 nm (and later 220 nm with the TNT2 Pro) allowed for higher clock speeds (125 MHz core and 150 MHz on the memory, compared to 90/110 on the original TNT), while the chip supported larger textures, a 32-bit Z-buffer, AGP 4X and a maximum of 32 MB of memory.

Compared to rival products from 3Dfx, the TNT2 featured better support for DirectX and OpenGL and packed excellent image quality with 32-bit color. Gamers took notice.

Nvidia GeForce 256 DDR

In 1999, Nvidia introduced the first GeForce chip, known as the GeForce 256. There were two variants, with the high-end DDR model gaining most of the attention for its speedy memory.

The GeForce 256 also featured a transform and lighting (T&L) engine that allowed the chip to handle calculations previously covered by the CPU. This feature allowed the card to be paired with a slower processor yet still deliver excellent performance. While critics and rivals said the technology wasn't necessary, it eventually became commonplace and was found in nearly every graphics card on the market.

The GeForce 256 was also the basis for the first Quadro product for computer-aided design. This line of products opened up a whole new and lucrative market for Nvidia.

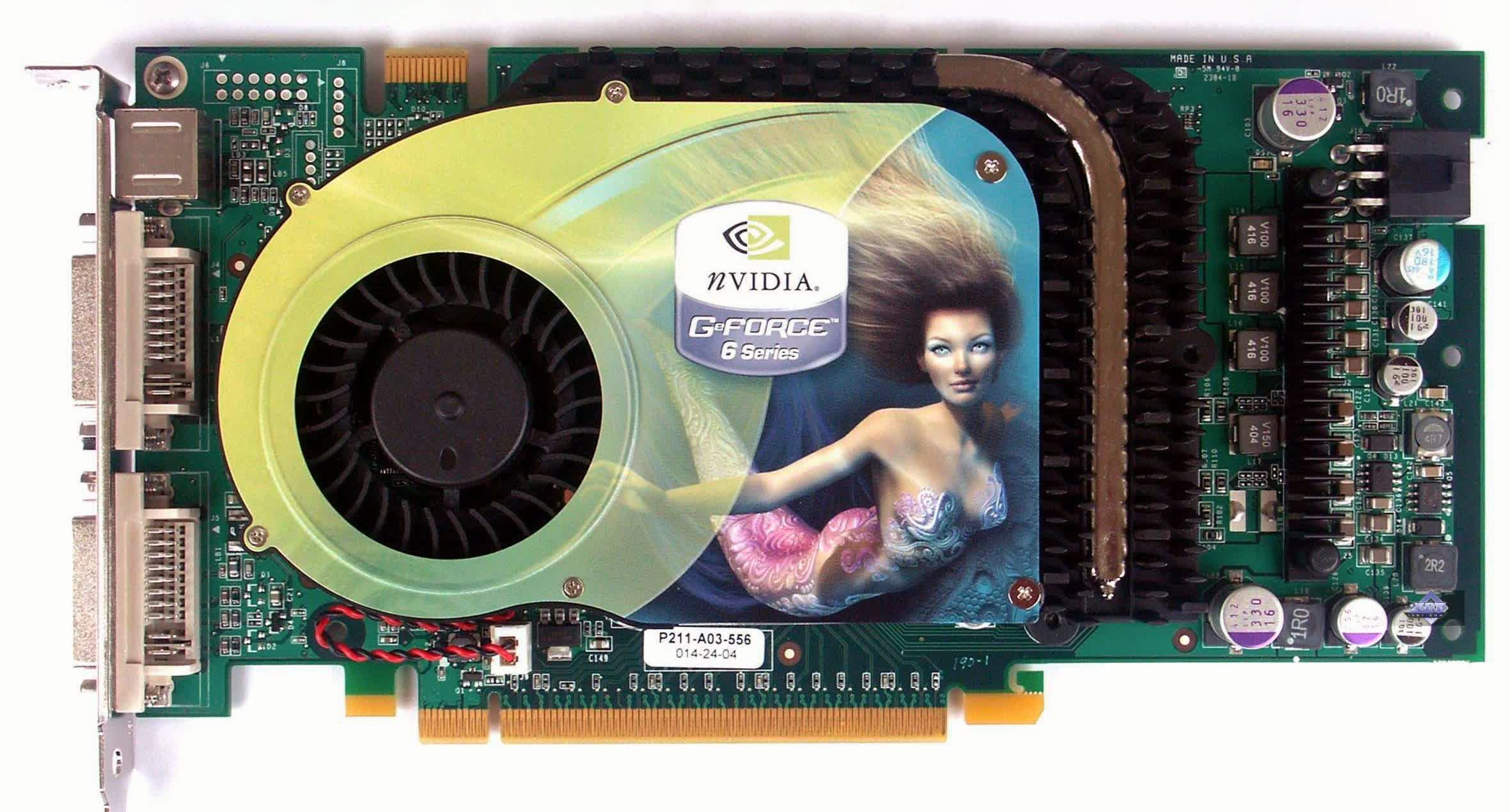

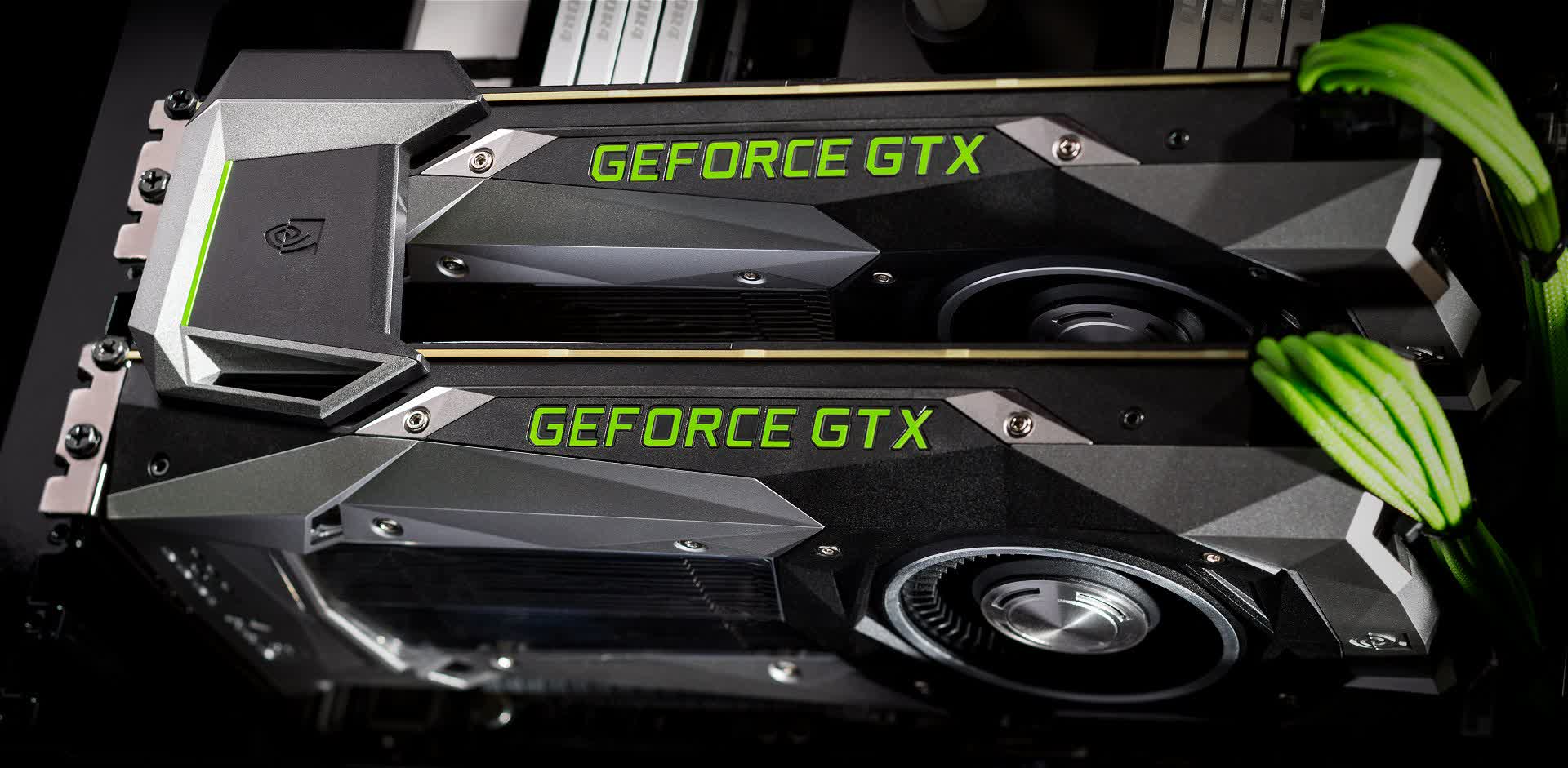

Nvidia GeForce 6800 series

The early 2000s turned into a royal rumble between ATI and Nvidia, as they one-upped each other through the GeForce 2, 3, and 4 eras. After ATI dropped their Radeon 9700 and impressed everyone, Nvidia had to throw a haymaker of its own, and thus the GeForce 6800 was introduced.

As one of the early products to sport GDDR3 memory, the GeForce 6800 was a speed demon, even seeing off ATI's X800 XT thanks to better shader model support and 32-bit floating-point precision than the Radeon offered. Furthermore, since Nvidia had acquired 3Dfx, it began implementing features and technology from the former graphics company.

One major part of 3Dfx's portfolio was Scan-Line Interleave, which linked two video cards and boosted the available 3D processing power. In 2004, Nvidia modernized this feature and reintroduced it as Scalable Link Interface (SLI), with many enthusiasts pairing 6800s to create the ultimate gaming PC.

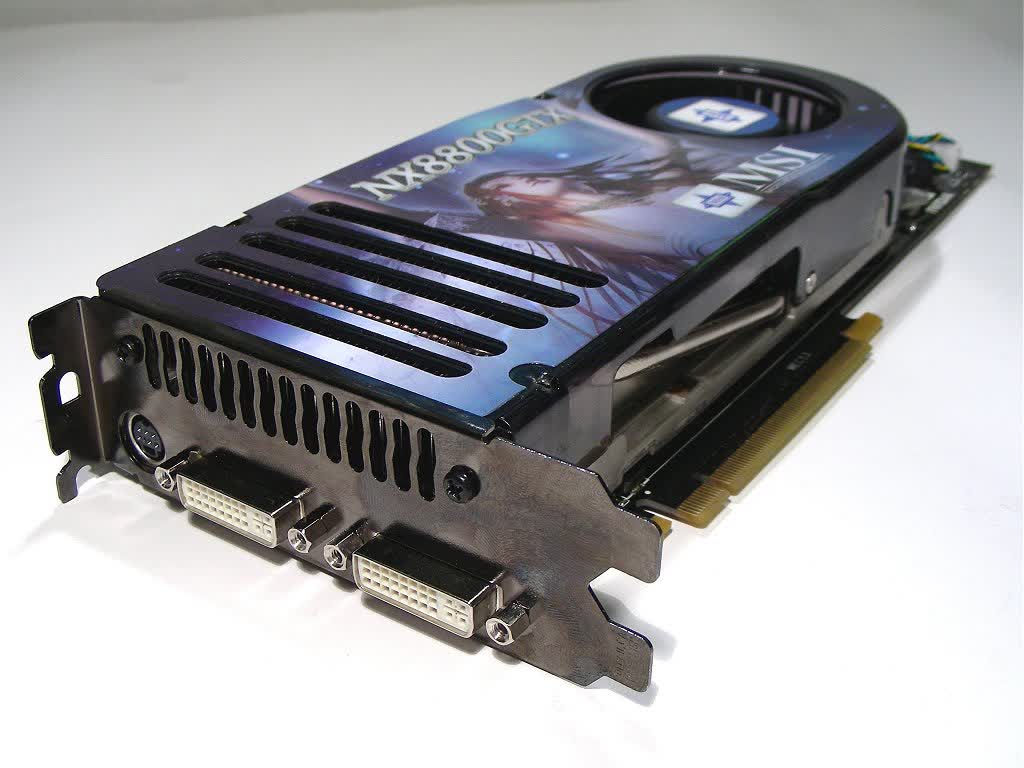

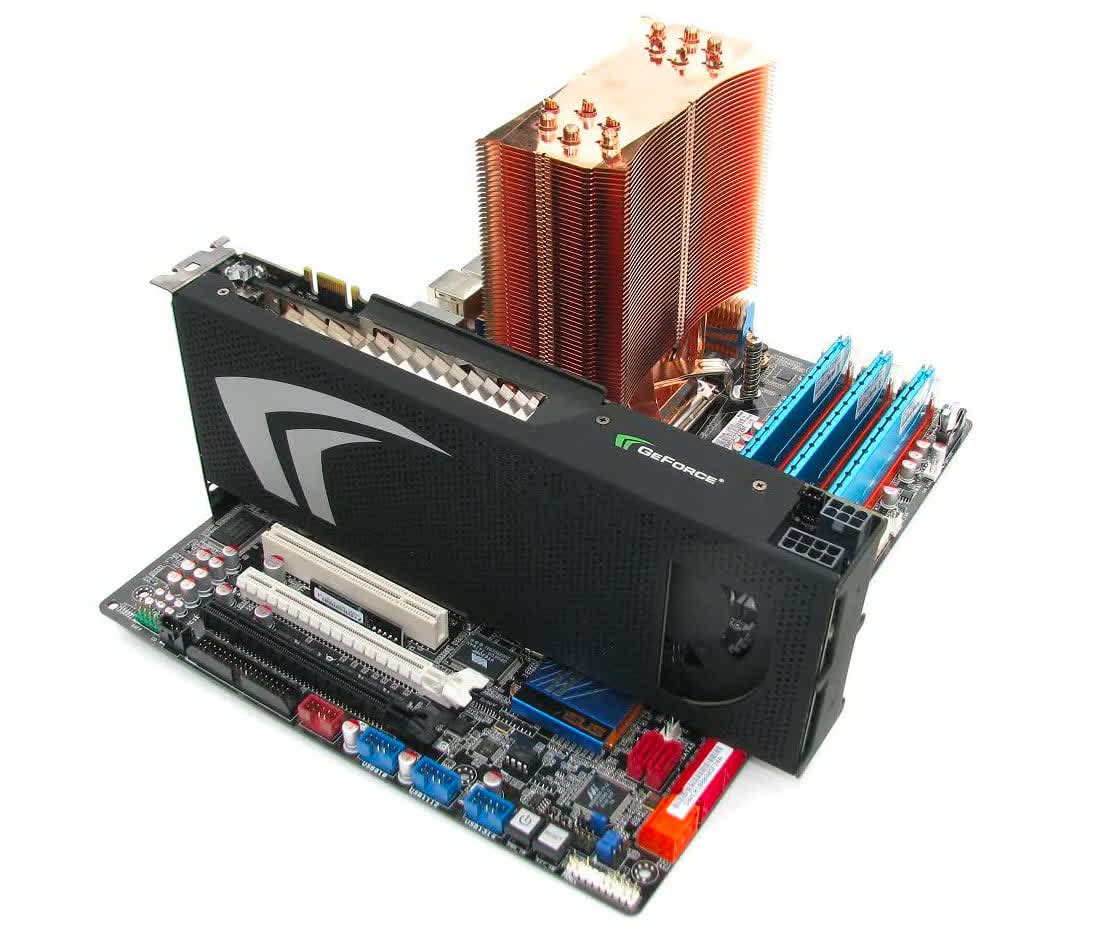

Nvidia GeForce 8800 series

Two years after the GeForce 6800, Nvidia introduced the new GeForce 8800 series. The new graphics card was an absolute beast of a gaming GPU. With up to 128 streaming processors, a 575 MHz core, a 1.35 GHz shader clock, and 768 MB of GDDR3, it could actually outpace a dual GPU flagship from the previous generation.

It was one of the first products to use a unified shader architecture. The GeForce 8 series was considered fast even years after its initial debut. Not everything was gravy, as a significant number of this series' chips suffered from an overheating issue that led to many failures.

Nvidia GeForce GTX 295

Just before the 2010s came around, dual-GPU boards became a hot commodity, and AMD was pretty aggressive with its ATI Radeon HD 4870 X2. To rival that, Nvidia brought out the GeForce GTX 295, which put two of its GeForce 200 chips on a single board.

With the right drivers, this was the fastest graphics card money could buy. At $500, it could outperform a pair of GTX 260s, each of which carried an MSRP of $450, making it a bargain for enthusiasts.

Nvidia GeForce GTX 580

While the GeForce GTX 480 launch didn't go as planned, all was well with its successor, the GTX 580. A fully unleashed Fermi architecture meant 16 streaming multiprocessor clusters, six active 64-bit memory controllers, and full-speed FP16 filtering.

This graphics card was the real deal. With AMD hot on its heels, the GTX 580 needed to be everything the GTX 480 wasn't, and fortunately it was enough to hold off AMD for a while. The top-tier dual-GPU GTX 590, by contrast, was met with scathing reviews due to poor stability and its inability to upstage the Radeon HD 6990.

Nvidia Tegra X1 SoC

Nvidia had been making products for PC enthusiasts, design professionals, and even supercomputers and AI researchers, but with Tegra SoCs, it made the jump into the world of mobile computing. The Arm-based Tegra lineup was designed for smartphones and tablets, but things went beyond that, and the extra processing power of the SoC was put to good use in the Nintendo Switch.

The success of the Switch has been off the charts, with nearly 70 million units sold since 2017. Each one packs the Tegra X1, which pairs a quad-core ARMv8 Cortex-A57 CPU with a Maxwell-based 256-core GPU.

Nvidia GeForce GTX 1080

Fabricated on a 16 nm process, the GeForce GTX 1080 series was extremely influential and a big milestone for the GPU maker, showcasing Nvidia's might and design expertise. The Pascal architecture was well ahead of the competition, so much so that GeForce GTX 10 cards remained in contention for several years, which is almost an anomaly in the graphics market.

The GTX 1080 became the benchmark to beat, and even the next generation of Nvidia GPUs struggled to show any significant improvement in raster performance. Speedy GDDR5X memory was matched with 2560 cores in the GTX 1080 and 3584 cores in the GTX 1080 Ti, leading to eye-popping frame rates even as you increased resolutions.

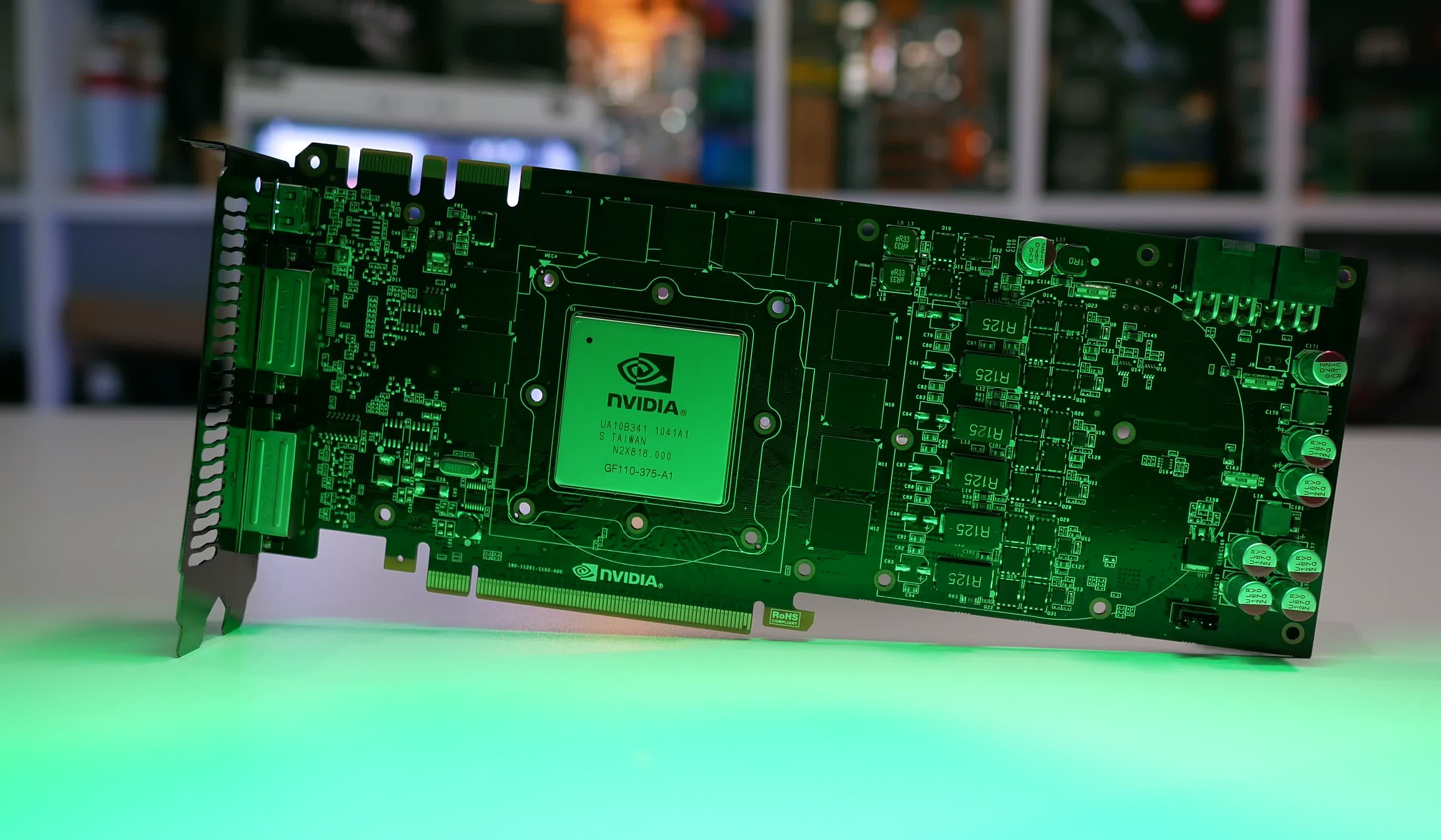

Nvidia GeForce RTX 3080

The GTX 1080 was followed by the Turing-powered RTX 20 series, which implemented real-time hardware ray tracing for the first time in a consumer-oriented video card. However, there were very few games with ray tracing features at the time of its release, and in general, the RTX 20 series didn't feel like a significant enough jump from the GTX 10 series.

Nvidia upped the ante with the RTX 30 series and the Ampere architecture, making the RTX 3080 the ultimate gaming card regardless of resolution, even with demanding ray tracing features enabled when using DLSS. The 3080 has 28.3 billion transistors and 8704 cores clocked up to 1.7 GHz, delivering an impressive 70% performance jump over the RTX 2080 at 4K.

On the less positive side, the card features just 10 GB of memory, although it's of the faster GDDR6X kind. It's also power hungry, but it's very fast and that makes up for all of it. At the original $700 MSRP (a price that, given the chip shortages that ensued, would be a dream to find today), it could be considered a bargain for enthusiasts, thanks to a low cost per frame.

Wrap Up

Nvidia has grown into a graphics giant and beyond by steadily churning out impressive and innovative products for computer gamers and enthusiasts and, more recently, by providing solutions for automakers, creative studios, and researchers. Not to mention the impending (and somewhat doubtful?) acquisition of Arm.

These days, Nvidia is pushing its real-time hardware ray tracing products, which allow for photorealistic lighting. However, even without these features, its GPUs are among the fastest performing in the industry. As we enter a new generation of gaming, with new consoles featuring ray tracing support, it'll be interesting to see if Nvidia can increase its lead over the competition.

25 years later: How many of these have you owned?

Also, don't miss our parallel feature showing off the Top 10 Most Significant AMD/ATI GPUs.