Something to look forward to: Google has finally launched Gemini, its most advanced AI model so far. The search giant says it outperforms GPT-4 on almost every benchmark it tested, but we should probably hold off on getting too excited until independent tests come out.

Google appears to have timed Gemini's launch perfectly, as GPT-4 developer OpenAI is still recovering from the internal turmoil that saw CEO Sam Altman fired and rehired within a matter of days. The timing was probably unintentional but advantageous nonetheless, since OpenAI will need more than a minute to process and respond to the news.

Meanwhile, Google's PR hype train is running full-throttle, with the company releasing several videos on YouTube and X/Twitter, along with a lengthy blog post. That's not to downplay the AI's capabilities; the demonstrations Google's developers shared are impressive. Just keep in mind that Google is a for-profit company and will show its products in the best possible light.

Seeing some qs on what Gemini *is* (beyond the zodiac :). Best way to understand Gemini's underlying amazing capabilities is to see them in action, take a look ⬇️ pic.twitter.com/OiCZSsOnCc

– Sundar Pichai (@sundarpichai) December 6, 2023

Disclaimers aside, Sundar Pichai's X post (above) is probably the best video demonstrating Gemini's abilities. In it, a Gemini-powered chatbot shows that it understands several types of input, primarily audio and visual in this example. More broadly, Gemini is "multimodal," meaning it can understand text, image, audio, and video inputs.

For instance, it can accurately identify objects in pictures or videos, transcribe spoken words into text, and generate a coherent response to a complex query. It can distinguish between communication modes and reason out their meaning when several inputs are used simultaneously. Likewise, it can respond using multiple types of output.

The AI model comes in three sizes. Gemini Ultra is the largest and most capable model, built for highly complex tasks and geared mainly toward data centers. Gemini Pro is designed to scale across a wide range of tasks. Finally, Gemini Nano is built for "on-device tasks," and Google has announced plans to integrate it into the Pixel 8 Pro.

Google's benchmark results can be difficult to parse unless you closely follow AI training and development. DeepMind CEO Demis Hassabis explained the most important ones in a post on Google's blog.

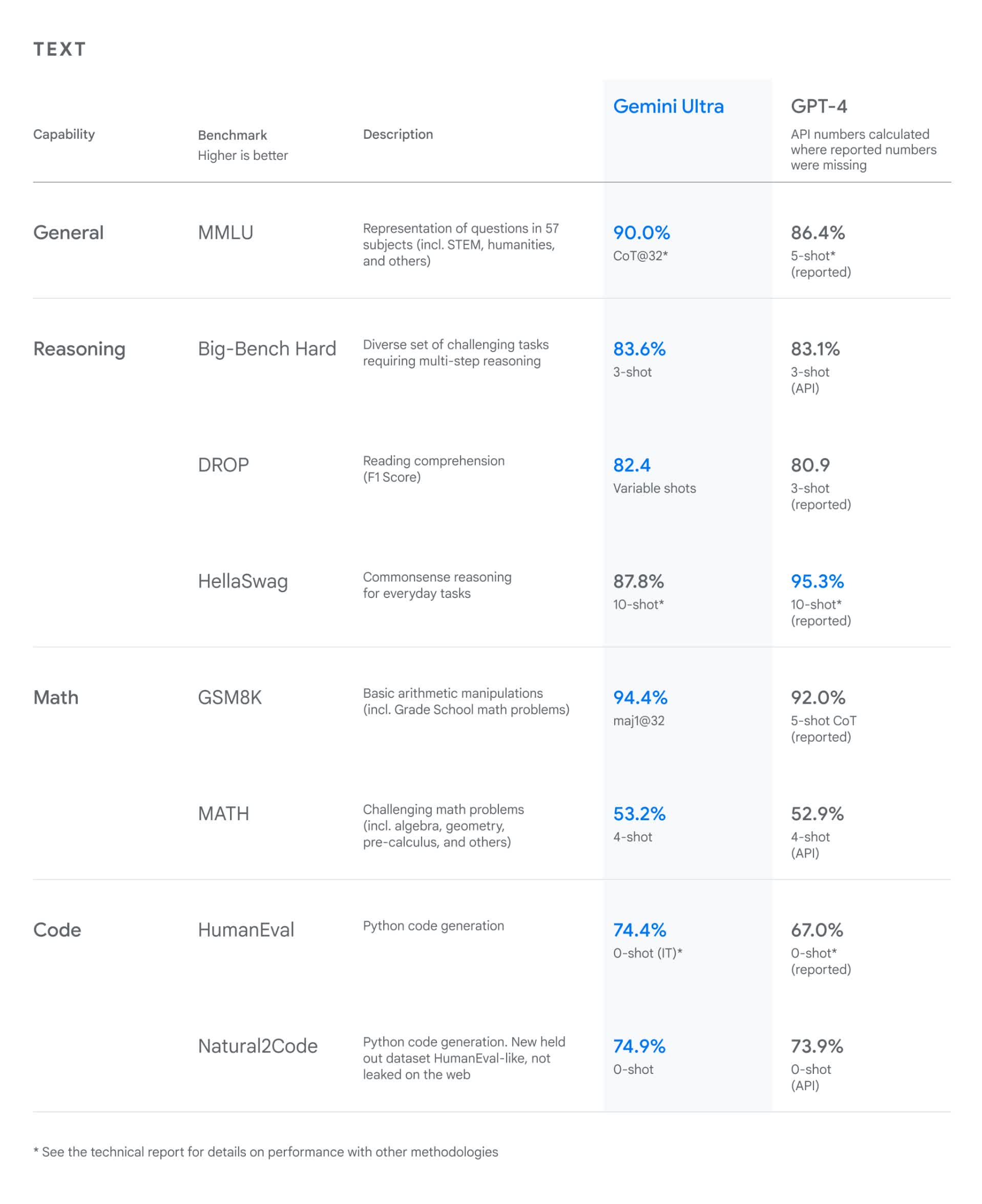

Gemini Ultra scored an industry-high 90 percent on MMLU (Massive Multitask Language Understanding), a benchmark that tests knowledge and problem-solving across 57 subjects such as math, physics, law, and ethics. Google says this beats GPT-4's score of 86.4 percent, although the two results were obtained with different prompting methods (chain-of-thought with 32 samples for Gemini, five-shot for GPT-4). The benchmark uses only text input, but the high score suggests that Gemini has a strong grasp of language across many subjects, which could make it more versatile in diverse applications.

Hassabis also claims Gemini beats GPT-4 59.4 to 56.8 percent on the new MMMU (Massive Multidiscipline Multimodal Understanding and Reasoning) benchmark. This test measures an AI's ability to reason deliberately through multidiscipline tasks that demand college-level subject knowledge.

Developers listed 16 other benchmarks. HellaSwag (common-sense reasoning about everyday tasks) was the only one on which OpenAI's GPT-4 posted a higher score (95.3 to 87.8 percent). Many of the other results that show Gemini leading are so close as to be negligible.
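To put the head-to-head numbers quoted in this article on one scale, here is a minimal sketch that computes Gemini's lead (or deficit) in percentage points. It assumes only the three score pairs cited above (MMLU, MMMU, HellaSwag); the dictionary name `SCORES` and the helper `margins` are hypothetical illustrations, and the other benchmarks Google listed are not reproduced here.

```python
# Head-to-head scores (percent) as quoted in this article: (Gemini, GPT-4).
# Hypothetical illustration: only these three pairs appear in the text.
SCORES = {
    "MMLU": (90.0, 86.4),
    "MMMU": (59.4, 56.8),
    "HellaSwag": (87.8, 95.3),
}


def margins(scores):
    """Return Gemini's lead over GPT-4 in percentage points.

    A negative value means GPT-4 scored higher on that benchmark.
    Results are rounded to one decimal place to hide float noise.
    """
    return {name: round(gemini - gpt4, 1) for name, (gemini, gpt4) in scores.items()}


if __name__ == "__main__":
    for name, diff in margins(SCORES).items():
        leader = "Gemini" if diff > 0 else "GPT-4"
        print(f"{name}: {leader} ahead by {abs(diff)} points")
```

Run as-is, it reports Gemini ahead by 3.6 points on MMLU and 2.6 on MMMU, and GPT-4 ahead by 7.5 on HellaSwag. Raw point differences ignore how each score was obtained (prompting method, number of samples), so they are a rough guide rather than a verdict.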

Gemini has begun rolling out across a range of platforms. Google's Bard chatbot has already received Gemini Pro integration, which Google calls the assistant's most significant update ever. It is available in more than 170 countries, but only in English for now. Google says more languages are in the pipeline.

As previously mentioned, Google is adding Gemini Nano to the Pixel 8 Pro. The company also plans to integrate Gemini into its other products, including Search, Ads, Chrome, and Duet AI. Starting December 13, developers and enterprise customers will be able to access Gemini Pro through an API.

Gemini Ultra is not available yet, as Google is still performing trust and safety checks on its most capable model. The company plans to offer it first to select customers, developers, partners, and safety experts for "early experimentation" before rolling it out to developers and enterprise customers early next year.